System Design - Cache

A cache is like short-term memory: it has limited space but is faster to access than the original data source. It relies on the principle that recently requested data is likely to be requested again. Caches can be added at almost every layer, but are often found nearest to the front end, e.g., in browsers.

Application server cache

A cache is placed on each server node, and each cache holds only the data requested through its own node. However, behind a load balancer a request can be sent to any node, so a request may land on a node that has not cached its data, which increases cache misses. A distributed cache or a global cache can overcome this.

Distributed cache

Each server node owns part of the cached data. A request is hashed to a particular node, so the same request always goes to the same node.
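The hashing step can be sketched as follows. This is a minimal illustration, not a production scheme: `node_for_key` and the node names are hypothetical, and plain modulo hashing is assumed for simplicity.

```python
import hashlib


def node_for_key(key: str, nodes: list[str]) -> str:
    """Pick the cache node responsible for a key (hypothetical helper)."""
    # Use a stable hash; Python's built-in hash() is randomized per process for strings.
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    index = int.from_bytes(digest[:8], "big") % len(nodes)
    return nodes[index]


# Hypothetical node names, for illustration only.
nodes = ["cache-node-0", "cache-node-1", "cache-node-2"]
first = node_for_key("user:42", nodes)
second = node_for_key("user:42", nodes)
assert first == second  # the same key always maps to the same node
```

With modulo hashing, adding or removing a node remaps most keys. Consistent hashing is commonly used to limit that reshuffling.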

Global cache

All nodes use the same cache space.

CDN – Content distribution network

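Requests ask the CDN for content first. If the CDN does not have the content, it typically fetches it from the origin server, caches it, and serves it.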

Cache Invalidation

When data is modified in the database, the corresponding entry in the cache should be invalidated.
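A minimal sketch of invalidation on update, assuming plain dicts stand in for both the database and the cache. The class and method names are hypothetical.

```python
class InvalidatingStore:
    """Sketch: drop the cached entry whenever the database value changes."""

    def __init__(self, db: dict, cache: dict):
        self._db = db        # stand-in for the database
        self._cache = cache  # stand-in for the cache

    def update(self, key, value):
        self._db[key] = value
        # Invalidate rather than update: the next read reloads the fresh value.
        self._cache.pop(key, None)

    def read(self, key):
        if key in self._cache:
            return self._cache[key]
        value = self._db[key]
        self._cache[key] = value
        return value
```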

Cache update policy

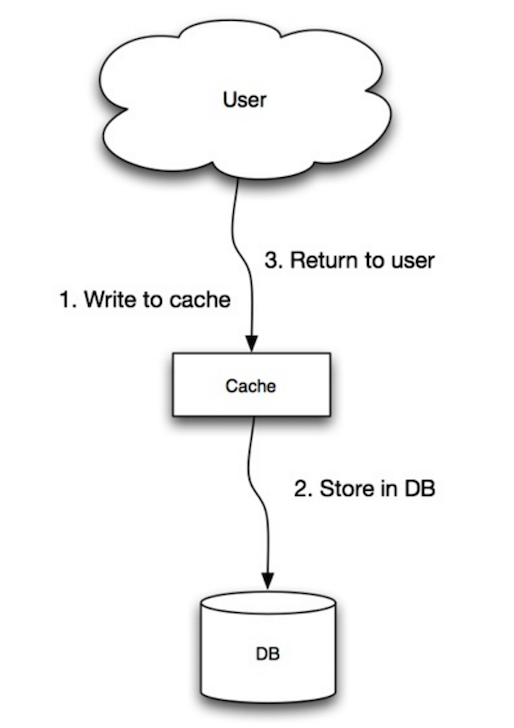

Write-through Cache

The application writes only to the cache, and the cache is responsible for synchronously writing the data to the DB before the write is acknowledged. To users, the cache and the DB look like a single datastore. Advantage: minimizes the risk of data loss. Disadvantage: higher latency for write operations.
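A minimal write-through sketch, assuming a plain dict stands in for the DB. `WriteThroughCache` is a hypothetical name, and a real cache would also need a capacity limit and error handling.

```python
class WriteThroughCache:
    """Sketch: every write reaches the DB before write() returns."""

    def __init__(self, db: dict):
        self._db = db      # stand-in for the database
        self._cache = {}

    def write(self, key, value):
        # Persist first, so the cache never holds a value that failed to be stored.
        self._db[key] = value
        self._cache[key] = value

    def read(self, key):
        if key in self._cache:
            return self._cache[key]
        value = self._db[key]      # cache miss: load from the DB
        self._cache[key] = value
        return value
```

The caller only talks to the cache object. The extra DB write inside `write` is where the higher write latency comes from.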

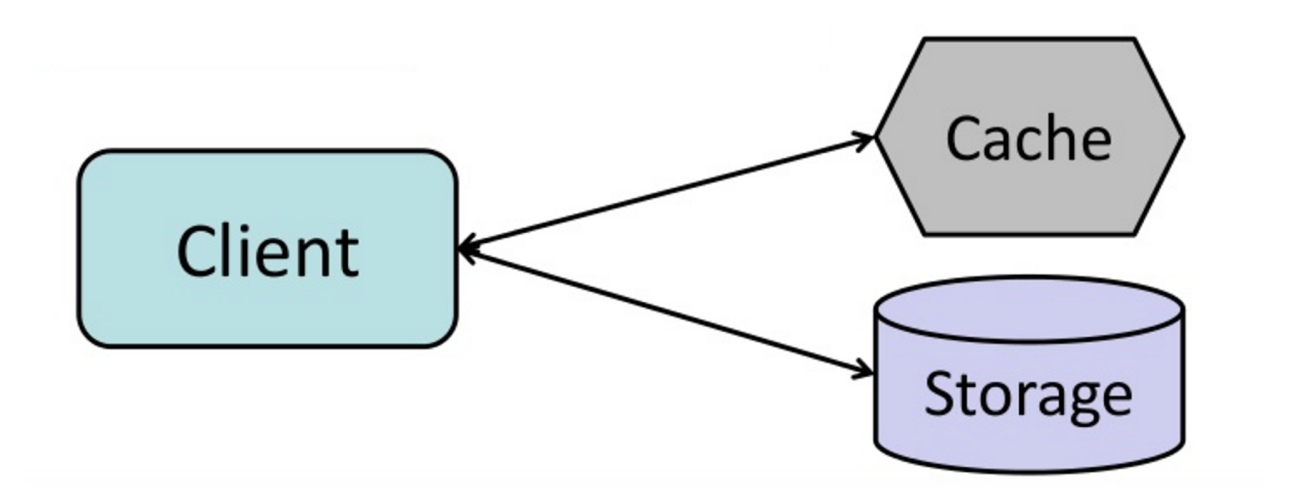

Write Around Cache (Cache aside)

Data is written directly to the DB, bypassing the cache.

Advantage: fewer write operations in the cache.

Disadvantage: newly written data causes a cache miss and needs to be read from the back end.

Read: look for the entry in the cache; if it is missing, load it from the database and add it to the cache. Data can become stale if it is updated in the database but not in the cache. Adding a TTL (time to live) mitigates this problem, i.e., once the TTL expires, the entry in the cache is invalidated.
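The read path and TTL expiry can be sketched as below. This is an illustration under assumptions: a dict stands in for the database, and the `CacheAside` class and its injectable `clock` parameter are hypothetical.

```python
import time


class CacheAside:
    """Sketch: writes bypass the cache; reads populate it with a TTL."""

    def __init__(self, db: dict, ttl_seconds: float, clock=time.monotonic):
        self._db = db            # stand-in for the database
        self._ttl = ttl_seconds
        self._clock = clock      # injectable so expiry can be tested
        self._entries = {}       # key -> (value, expires_at)

    def write(self, key, value):
        # Write around: only the database is updated.
        self._db[key] = value

    def read(self, key):
        entry = self._entries.get(key)
        if entry is not None:
            value, expires_at = entry
            if self._clock() < expires_at:
                return value             # fresh cache hit
            del self._entries[key]       # TTL expired: invalidate
        value = self._db[key]            # cache miss: load from the database
        self._entries[key] = (value, self._clock() + self._ttl)
        return value
```

Until the TTL expires, a read can still return the old value after the database has changed. That window is the staleness the TTL bounds.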

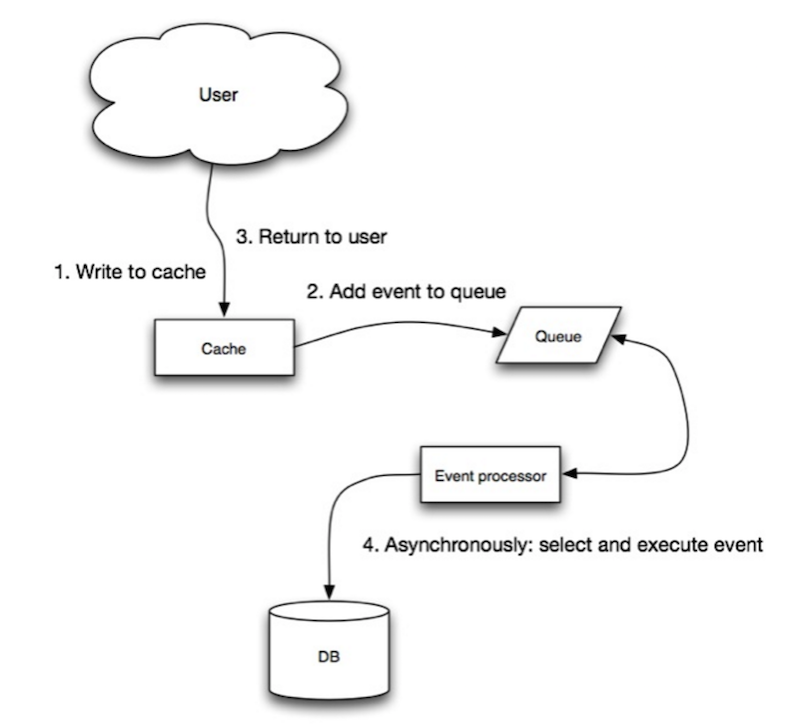

Write Back Cache

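Data is written to the cache alone, and the write to the DB is put on a queue to be applied later. Write latency is low, but there is a risk of data loss if the cache crashes before the queued writes reach the DB.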
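A minimal write-back sketch, assuming a dict stands in for the DB and an explicit `flush` call stands in for the asynchronous worker that a real system would run. All names are hypothetical.

```python
from collections import deque


class WriteBackCache:
    """Sketch: writes hit the cache immediately and reach the DB later."""

    def __init__(self, db: dict):
        self._db = db             # stand-in for the database
        self._cache = {}
        self._pending = deque()   # queued writes not yet persisted

    def write(self, key, value):
        self._cache[key] = value
        self._pending.append((key, value))   # the DB write is deferred

    def read(self, key):
        if key in self._cache:
            return self._cache[key]
        value = self._db[key]
        self._cache[key] = value
        return value

    def flush(self):
        # Drain the queue in order; anything still queued at a crash is lost.
        while self._pending:
            key, value = self._pending.popleft()
            self._db[key] = value
```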

Cache Eviction Policies

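FIFO (first in, first out), LIFO (last in, first out), LRU (least recently used), LFU (least frequently used), MRU (most recently used), random replacement.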
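As one example of these policies, LRU can be sketched with `collections.OrderedDict` from the standard library. The `LRUCache` class is a hypothetical illustration, not a tuned implementation.

```python
from collections import OrderedDict


class LRUCache:
    """Sketch: evict the least recently used entry when capacity is exceeded."""

    def __init__(self, capacity: int):
        if capacity <= 0:
            raise ValueError("capacity must be positive")
        self._capacity = capacity
        self._items = OrderedDict()   # oldest entry first, newest last

    def get(self, key, default=None):
        if key not in self._items:
            return default
        self._items.move_to_end(key)  # mark as most recently used
        return self._items[key]

    def put(self, key, value):
        if key in self._items:
            self._items.move_to_end(key)
        self._items[key] = value
        if len(self._items) > self._capacity:
            self._items.popitem(last=False)  # drop the least recently used entry
```

The other policies differ mainly in which entry is dropped: FIFO ignores reads when ordering entries, and MRU would evict from the opposite end.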